© 2024 The authors. This article is published by IIETA and is licensed under the CC BY 4.0 license (http://creativecommons.org/licenses/by/4.0/).

OPEN ACCESS

Rice (Oryza sativa) is a staple commodity for much of Asia's population. Rice cultivation often fails because of climate or disease. Several diseases attack rice leaves, including hispa, brown spot, and leaf blast, so a system that detects them is needed. Early identification of these diseases matters for several reasons: (1) Prevention of spread: prompt detection enables immediate intervention and stops the disease from reaching healthy plants. (2) Reduced yield loss: early management substantially reduces the adverse effects on crop quality and yield. (3) Cost-effective management: prompt intervention reduces the need for expensive and time-consuming chemical treatments. (4) Sustainable agriculture: effective management supports integrated pest management and reduces reliance on chemical controls. Deep learning is one way to build such a detection system. YOLOv8 is a deep learning model that is currently popular for object detection. In this study, a YOLOv8 model, which is a convolutional neural network (CNN) optimized for object detection, is trained on a dataset comprising four classes: healthy, hispa, brown spot, and leaf blast. The YOLOv8s.pt model yields an average accuracy of 97%, which is a good experimental result. The model was trained on Google Colaboratory Pro with a Tesla T4 GPU.

YOLOv8, deep learning, rice leaf disease, CNN, classification, Google Colaboratory, object detection

Disease is one of the factors that impair the growth of the rice plant (Oryza sativa) and can cause plants to die [1]. Rice leaf diseases are a significant and widespread problem affecting rice cultivation globally, particularly in Asia, where rice is a staple food and a critical component of food security. Diseases such as bacterial blight, rice blast, and brown spot pose severe threats to rice production, leading to substantial yield losses and economic damage. These diseases can spread rapidly, often exacerbated by favorable environmental conditions and insufficient early detection methods. Their prevalence and severity call for efficient and accurate diagnostic solutions that ensure timely intervention and mitigate the impact on food security and the livelihoods of millions of farmers. A drop in rice production caused by harvest failure from leaf disease is therefore very damaging [1, 2]. When farmers are late in recognizing the disease attacking their plants, it can spread rapidly; when they misdiagnose it, the wrong treatment can itself damage the crop. Two diseases that often attack rice plants are rice blast and brown spot. They look similar on close inspection but have different causes, so correct recognition has a large effect on whether the damage leads to crop failure [3, 4].

Farmers' treatment is severely affected when they do not know that their plants are being attacked by pests [5]. Because many pests attack rice plants, various studies have sought to increase the disease resistance of rice seeds [6]. Another approach is to use technology developed to identify and classify diseases on rice leaves, namely image recognition with deep learning [7].

Rice diseases are usually recognized with deep learning because they appear as spots with characteristic shapes and color patterns, and a CNN can detect these shapes and colors [8]. A CNN is a deep learning algorithm in which an artificial neural network takes images as input, analyzes various aspects of each image, and learns to distinguish one image from another [9]. CNNs are currently very popular among deep learning users, chiefly because their feature extractors can be trained to suit the task, so an existing network can learn to recognize new objects [9, 10]. In this research, we use the YOLO (You Only Look Once) family of CNN models in its newest series, YOLOv8 [11]. YOLOv8 was developed from YOLOv5, which was chosen as the development base because it is accurate, fast, and easy to use, with a design whose detection performance is better than that of earlier models [12].

This study addresses the identification and classification of rice leaf diseases using the YOLOv8 model. The paper is organized into several parts. First, it introduces a recently released deep learning model that can detect objects and classify diseases that attack plants. Second, it reviews the performance of YOLOv8 against related references covering various deep learning models and methods for detecting diseases in rice plants. Third, it reports trials on the prepared dataset to find the best results using YOLOv8-n and YOLOv8-s. Fourth, it discusses the results by comparing them with several previous studies. Fifth, it draws final conclusions.

2.1 Detection and classification of rice leaf diseases with deep learning

In this research, deep learning is used to detect and classify diseases that attack rice plants. Deep learning is used very often for object detection, especially CNNs, which have been widely tested on image detection and are therefore well suited to detecting diseases in rice leaves. Several studies have applied leaf disease detection to rice plants in search of models that detect and classify rice leaf diseases well, so the models and experiments reported in these articles need to be compared to find the best model for detection and classification.

Costales et al. [13] performed image augmentation to increase the number of image samples and improve the accuracy of the neural network. Consequently, the modified neural network achieved high throughput with accuracy approaching human-level performance. Another study developed a prototype application for rice leaf disease detection using a CNN for local farmers [6]. Wang et al. [4] conducted classification and detection trials using the Attention Based Neural Network and Bayesian Optimization (ADSNN-BO) model, which is based on the MobileNet structure with an augmented attention mechanism. Testing their model gave the following scores: F1: 89.6%, Precision: 92.6%, Recall: 87.4%, and Test Accuracy: 94.6%.

Meanwhile, Haque et al. [2] report that the YOLOv5 model significantly improved accuracy and F1 scores compared to previous models (ResNet v2, SVM, VGG16). Their experiments yielded F1: 86%, Precision: 90%, Recall: 67%, and mAP/AP: 81%. The original YOLO imposes strong spatial constraints on bounding box prediction because each grid cell predicts only two boxes and can have only one class. This constraint limits the number of nearby objects the model can predict, so it struggles with small objects that appear in groups, such as flocks of birds [8]. Comparison across studies is further complicated because existing work evaluates deep learning-based systems with different metrics, such as accuracy, precision, and recall. This technology aims to overcome the challenges of early diagnosis and management of complex plant diseases [9].

Anwar et al. [14] state that YOLO is a deep learning model that excels in object detection compared to other methods. YOLOv7, the latest version of the YOLO architecture at the time of that study, is characterized by its rapid detection speed, high precision, and ease of training and implementation. Using YOLOv7 with a focus on preprocessing, data augmentation, annotation, and object labeling gives good results, as shown by the reported performance evaluations [9]. The dataset itself is very important in any case study. A dataset for training instance segmentation differs from one for ordinary object detection: its labels must outline the precise area of each object rather than just mark the region where an object is likely to be [15]. The CNN block has two convolutional layers with 3 × 3 kernels, to which neither padding nor activation is applied. The output of the two convolutional layers is fed into a leaky ReLU [16], followed by one max-pooling layer with a 2 × 2 kernel. The idea of using two consecutive convolutional layers with 3 × 3 kernels comes from the VGG structure, which suggests that stacked smaller filters have the same receptive field as a larger filter while having fewer trainable parameters [11].

To address rice leaf disease, one technique combines CNN object detection with image tiling, automatically estimating the width of the rice leaf in the image as a size reference for dividing the original input image [17]. Deep learning is also advancing in the early diagnosis and management of plant diseases through vision-based techniques; to expand its use, it needs to be combined with other algorithms [18]. We compared YOLO, ADSNN-BO, and other models in the relevant reference journals in detail to ground the trials carried out here. Based on the precision and accuracy reported there, the YOLO model was selected for this research as a model that is good at detecting and classifying rice leaf diseases.

In this research, we took data from kaggle.com. The data were then processed with augmentation techniques such as rotation, flipping, scaling, and random lighting changes, applied during training to make the model more robust to variations. Objects in the images were also aligned by detecting and cropping the relevant areas to ensure consistent object position and scale. Additionally, grouping the data by shooting conditions and applying color correction helps standardize the dataset.
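As an illustration of the augmentation step, the following minimal Python sketch applies a random horizontal flip and a random lighting change to an image and keeps its YOLO-format labels consistent. It is a sketch under stated assumptions, not the pipeline used in this study: the image is a plain list of grayscale pixel rows, and the function names, the flip probability, and the brightness range are hypothetical.

```python
import random


def hflip(image, boxes):
    """Mirror an image (list of pixel rows) and its YOLO-format boxes left to right."""
    flipped_image = [row[::-1] for row in image]
    # Under a horizontal flip only the normalized x-centre changes.
    flipped_boxes = [(c, 1.0 - x, y, w, h) for (c, x, y, w, h) in boxes]
    return flipped_image, flipped_boxes


def adjust_brightness(image, factor):
    """Scale 8-bit grayscale intensities by `factor`, clipping to [0, 255]."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image, boxes, rng):
    """Random flip plus random lighting change (probability and range are assumptions)."""
    if rng.random() < 0.5:
        image, boxes = hflip(image, boxes)
    image = adjust_brightness(image, rng.uniform(0.7, 1.3))
    return image, boxes
```

Flipping changes the label as well as the pixels, which is why geometric augmentations for detection must transform the bounding boxes together with the image.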

Rice leaf disease detection offers several benefits for both farmers and the agricultural industry as a whole. These benefits include: (1) Early Disease Detection: Detecting diseases in rice leaves at an early stage allows for prompt intervention, reducing the spread of the disease and minimizing crop damage. (2) Increased Crop Yield: By identifying and treating diseases in their early stages, farmers can prevent significant crop losses, leading to higher yields and improved food security. (3) Cost Savings: Early disease detection can save farmers money by reducing the need for extensive pesticide or fungicide applications. It also minimizes the cost of crop loss due to diseases. (4) Sustainability: Reducing the use of pesticides and fungicides is environmentally friendly and contributes to sustainable farming practices, as it reduces the environmental impact of chemical inputs. (5) Data-Driven Decision-Making: Rice leaf disease detection often involves the use of technology, such as machine learning and image analysis. This data-driven approach helps farmers make informed decisions about disease management. (6) Precision Agriculture: Implementing disease detection technology is a part of precision agriculture, which optimizes resource use and minimizes waste by targeting treatment only where it's needed. (7) Reduced Human Error: Automated disease detection systems are less prone to human error and can provide consistent and accurate results, improving the reliability of disease diagnosis. (8) Disease Monitoring: Disease detection technology often includes monitoring features, allowing farmers to track the progression of diseases over time and adjust their management strategies accordingly. (9) Enhanced Food Security: By reducing crop losses and increasing yields, rice leaf disease detection contributes to global food security by ensuring a stable supply of rice. 
(10) Research and Development: The data collected from disease detection efforts can be used for research into disease resistance, the development of new crop varieties, and the creation of more effective disease management strategies.

2.2 YOLOv8 architecture

YOLOv8 is the latest version of the YOLO model [19]. It builds on the previous model, YOLOv5, which served as its development base [20]. YOLOv8 was released in 2023 with several model types for detection, segmentation, classification, and pose estimation [21]. Figure 1 shows a comparison of YOLO models.

Figure 1. YOLO model comparison

The YOLOv8 model adapts the backbone of YOLOv5 with several changes to the CSPLayer, which is renamed the C2f module. The C2f (cross-stage partial bottleneck with two convolutions) module combines high-level features with contextual information to improve detection accuracy [22]. Connecting more branches between layers enriches the gradient flow, which enables a richer feature representation. The C2f module improves feature representation through dense and redundant structures, varying the number of channels through scale-factor-based split and merge operations to reduce computational complexity and model capacity [23, 24].

Bounding boxes are the most frequently used type of annotation in deep learning [25]. In YOLO, a grid cell is responsible for detecting the objects whose centers lie within it, and bounding boxes with confidence scores are predicted for each cell [19]. If the model is sure that a box contains an object, it assigns a high confidence value [26]. In computer vision, the term "bounding box" refers to a rectangle that describes the spatial location of an object. Its position can be specified by the x and y coordinates of its top-left and bottom-right corners. In YOLO, confidence is calculated as Pr(Object) × IOU, where Pr(Object) is the probability that an object is present and Intersection over Union (IOU) is the overlap between the predicted box and the ground truth [27]. Each bounding box consists of five predictions: x, y, w, h, and confidence. In addition, each grid cell produces conditional class probabilities, written Pr(Class_i | Object) [25]. The class-specific confidence is given in Eq. (1):

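$$\Pr(\text{Class}_i) \times \text{IOU}_{\text{pred}}^{\text{truth}} = \Pr(\text{Class}_i \mid \text{Object}) \times \Pr(\text{Object}) \times \text{IOU}_{\text{pred}}^{\text{truth}} \tag{1}$$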
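As an illustration of the confidence score described above, the following minimal Python sketch computes the IOU of two boxes given by their corner coordinates and multiplies it by the object and class probabilities. The function names and the example numbers are hypothetical and are not taken from the code used in this study.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Width and height of the overlap are zero when the boxes do not intersect.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def class_confidence(p_class_given_object, p_object, iou_value):
    """Class-specific confidence: Pr(Class_i | Object) * Pr(Object) * IOU."""
    return p_class_given_object * p_object * iou_value
```

For example, the boxes (0, 0, 2, 2) and (1, 1, 3, 3) overlap in a unit square, so their IOU is 1 / (4 + 4 - 1) = 1/7.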

A labeling procedure is applied to each class, and a single image may receive several labels [28]. During the detection phase, only one class detector model is used, and each class label is associated with a separate training model. The bounding box labeling tool returns the corner coordinates of the object (x1, y1, x2, y2). These differ from YOLO's input values, which are the center point, width, and height (x, y, w, h). The system must therefore convert the bounding box coordinates to the YOLO input format [29], using the scale factor in Eq. (2).

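$$dw = \frac{1}{W}, \qquad dh = \frac{1}{H} \tag{2}$$

where W and H are the width and height of the image in pixels.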

YOLOv8 [30] uses an anchor-free model with decoupled heads that handle objectness, classification, and regression tasks independently. This design lets each branch focus on its own task and improves the overall accuracy of the model [31]. In the output layer, YOLOv8 uses the sigmoid function as the activation for the objectness score, which represents the probability that the bounding box contains an object, and the softmax function for the class probabilities, which represent the probability that the object belongs to each possible class [22]. The architecture is shown in Figure 2.
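The two output activations named above can be sketched in a few lines of Python. This only illustrates the sigmoid and softmax functions as described in the text; the logit values are made up for the example, and the actual Ultralytics implementation may organize its output heads differently.

```python
import math


def sigmoid(z):
    """Map a raw score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits):
    """Map raw class scores to probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for the four classes of this study.
class_logits = [2.0, 1.0, 0.1, -1.0]
class_probs = softmax(class_logits)
object_prob = sigmoid(0.0)
```

The sigmoid treats a single score independently, whereas the softmax makes the class scores compete, so raising one class probability lowers the others.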

In the current YOLOv8 architecture, shown in Figure 2, the SPPF (Spatial Pyramid Pooling Fast) module from YOLOv5 is applied to the backbone. SPP is a well-known and widely used method in computer vision for classification [32].

Figure 2. YOLOv8 architecture

SPP makes it possible not only to generate representations from arbitrarily sized images at test time, but also to feed images of various sizes and scales into the network during training. Training with variable-size images increases scale variation and reduces overfitting. SPP is also particularly well suited to object detection [33].

3.1 Dataset preparation

Data collection began by searching for a dataset of images of rice leaves affected by three types of disease, together with healthy rice leaves, so that four leaf conditions are covered in total. The dataset used in this study was sourced from kaggle.com, specifically the Rice Diseases Image Dataset [34]. It contains 3,216 photographs categorized into four classes, as outlined in Table 1.

Table 1. Data on the number of rice leaf samples

| Class | Total |
|---|---|
| Brown Spot | 500 |
| Healthy | 1,400 |
| Hispa | 560 |
| Leaf Blast | 756 |

The data are then labeled using LabelImg, which draws bounding boxes and assigns labels on each image so that the model can correctly recognize the objects to be identified [35], as shown in Figure 3. LabelImg is a freely available graphical image annotation tool for computer vision tasks such as object detection [36]. It is known for its user-friendly interface and is widely used to generate annotations in the Pascal VOC format, which is compatible with numerous machine learning frameworks, including YOLO. Annotating a dataset for YOLO object detection involves specifying the coordinates and class label of each object in an image. A YOLO annotation consists of a class label and bounding box coordinates (center x, center y, width, height) for each object. In this research, the annotations are saved in a TXT file containing the class ID, x, y, width (w), and height (h) [37]. The values of x, y, w, and h are obtained from the following equations:

$$x = \frac{x_1 + x_2}{2} \cdot dw \tag{3}$$

$$y = \frac{y_1 + y_2}{2} \cdot dh \tag{4}$$

$$w = (x_2 - x_1) \cdot dw \tag{5}$$

$$h = (y_2 - y_1) \cdot dh \tag{6}$$
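The conversion in Eqs. (3)-(6) can be sketched in Python as follows. The sketch assumes that dw and dh are the reciprocals of the image width and height in pixels; the function names and the six-decimal output format are illustrative choices, not the tooling used in this study.

```python
def to_yolo(x1, y1, x2, y2, img_w, img_h):
    """Convert corner coordinates in pixels to normalized (x, y, w, h)."""
    dw = 1.0 / img_w  # horizontal scale factor
    dh = 1.0 / img_h  # vertical scale factor (assumed analogous to dw)
    x = (x1 + x2) / 2.0 * dw  # Eq. (3): normalized centre x
    y = (y1 + y2) / 2.0 * dh  # Eq. (4): normalized centre y
    w = (x2 - x1) * dw        # Eq. (5): normalized width
    h = (y2 - y1) * dh        # Eq. (6): normalized height
    return x, y, w, h


def yolo_line(class_id, box, img_w, img_h):
    """Format one annotation line: class ID followed by x, y, w, h."""
    x, y, w, h = to_yolo(*box, img_w, img_h)
    return f"{class_id} {x:.6f} {y:.6f} {w:.6f} {h:.6f}"
```

For a 400 × 500 pixel image, a box with corners (100, 50) and (300, 250) has its centre at (200, 150) and measures 200 × 200 pixels, so `yolo_line(2, (100, 50, 300, 250), 400, 500)` gives `2 0.500000 0.300000 0.500000 0.400000`.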

Figure 3. Dataset annotation with LabelImg

3.2 Performance measurement

YOLO is one of many deep learning models currently applied to automated object detection, and many other model types are also trained for this task [38]. The model applied in this experiment is YOLOv8, the latest model developed, using the YOLOv8n.pt and YOLOv8s.pt weights. The experiment was carried out on Google Colab Pro with a 16 GB T4 GPU and 32 GB of RAM. Tables 2 and 3 show the results of training YOLOv8n and YOLOv8s for 100 epochs.
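A training run of this kind could be set up with the Ultralytics Python package roughly as sketched below. This is an assumption-laden sketch rather than the script used in this study: the dataset file name `rice_leaf.yaml` is a hypothetical placeholder, and every setting other than the number of epochs is left at the package default because the text does not report it.

```python
# Hypothetical dataset description file listing image folders and class names.
TRAIN_ARGS = {"data": "rice_leaf.yaml", "epochs": 100}

# Pretrained weights named in the text.
WEIGHTS = ("yolov8n.pt", "yolov8s.pt")


def train_and_validate(weights, train_args=TRAIN_ARGS):
    """Train one model and return its validation mAP values."""
    # Imported lazily so the module can be loaded without the package installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    model.train(**train_args)
    metrics = model.val()
    return {"mAP50": metrics.box.map50, "mAP50-95": metrics.box.map}
```

Calling `train_and_validate(w)` for each entry of `WEIGHTS` would produce one set of metrics per model, which is the kind of comparison reported in the tables.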

Table 2. Training results YOLOv8n.pt

| Class | Images | Instances | P | R | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|
| All | 3,216 | 3,920 | 0.879 | 0.892 | 0.944 | 0.661 |
| Brown Spot | 3,216 | 743 | 0.868 | 0.864 | 0.926 | 0.53 |
| Healthy | 3,216 | 1,390 | 0.936 | 0.991 | 0.98 | 0.88 |
| Hispa | 3,216 | 740 | 0.843 | 0.864 | 0.93 | 0.658 |
| Leaf Blast | 3,216 | 1,047 | 0.87 | 0.868 | 0.943 | 0.578 |

Table 3. Training results YOLOv8s.pt

| Class | Images | Instances | P | R | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|
| All | 3,216 | 3,920 | 0.938 | 0.948 | 0.979 | 0.73 |
| Brown Spot | 3,216 | 743 | 0.936 | 0.934 | 0.973 | 0.631 |
| Healthy | 3,216 | 1,390 | 0.961 | 0.994 | 0.99 | 0.899 |
| Hispa | 3,216 | 740 | 0.916 | 0.918 | 0.971 | 0.728 |
| Leaf Blast | 3,216 | 1,047 | 0.941 | 0.948 | 0.983 | 0.664 |

Based on Tables 2 and 3, the YOLOv8s model outperforms the YOLOv8n model. YOLOv8s achieves average values of mAP50 = 0.979, R = 0.948, and P = 0.938, whereas YOLOv8n achieves lower values of mAP50 = 0.944, R = 0.892, and P = 0.879. YOLOv8s therefore gave the best results on the rice leaf disease dataset in this experimental study. Both sets of results were obtained by training for 100 epochs. Precision (P), Recall (R), and F1 [39] are calculated with Eqs. (7)-(9), where TP, FP, and FN are the numbers of true positives, false positives, and false negatives.

$$P = \frac{TP}{TP + FP} \tag{7}$$

$$R = \frac{TP}{TP + FN} \tag{8}$$

$$F1 = \frac{2 \times P \times R}{P + R} \tag{9}$$
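The three metrics can be sketched in Python as follows, using the standard definition of recall, TP / (TP + FN). The function names are illustrative; the precision and recall values in the usage note are the "All" rows of Tables 2 and 3.

```python
def precision(tp, fp):
    """Share of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Share of actual positives that are found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0
```

With the reported averages, `f1_score(0.938, 0.948)` is about 0.943 for YOLOv8s and `f1_score(0.879, 0.892)` is about 0.885 for YOLOv8n, which agrees with the F1 of roughly 94% reported for YOLOv8s.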

The mAP is determined from the Average Precision (AP) of every class in the dataset. The AP of each class is the area under its precision-recall curve, which is generated by varying the detection confidence threshold and computing precision and recall at each threshold [40]. A higher mAP indicates better performance in localizing and classifying objects in the image [41].

The AP is computed by summing the area under the interpolated precision-recall curve over the recall values at which the maximum precision drops. It can be calculated using Eq. (10) [42]:

$$AP = \sum_{n} (r_{n+1} - r_n)\, P_{\text{interp}}(r_{n+1}) \tag{10}$$

The mAP is then calculated using Eq. (11):

$$mAP = \frac{\sum_{i=1}^{N} AP(i)}{N} \times 100\% \tag{11}$$

AP(i) is the average precision of class i and N is the number of classes. It is difficult to compare a model with high precision and low recall against one with low precision and high recall. A metric that balances precision and recall, namely the F1 score, is therefore also needed [40, 43].
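Eqs. (10) and (11) can be sketched in Python as follows. The sketch assumes that the interpolated precision at a recall level is the maximum precision observed at that recall or any higher recall, and that the curve starts at recall 0; the function names are illustrative.

```python
def average_precision(recalls, precisions):
    """Area under the interpolated precision-recall curve, Eq. (10).

    `recalls` must be sorted in ascending order, with one precision per recall.
    """
    # Interpolated precision: running maximum taken from the right.
    p_interp = list(precisions)
    for i in range(len(p_interp) - 2, -1, -1):
        p_interp[i] = max(p_interp[i], p_interp[i + 1])
    # Prepend recall 0 so the first segment is counted.
    r = [0.0] + list(recalls)
    return sum((r[i + 1] - r[i]) * p_interp[i] for i in range(len(p_interp)))


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values as a percentage, Eq. (11)."""
    if not ap_per_class:
        raise ValueError("at least one class is required")
    return sum(ap_per_class) / len(ap_per_class) * 100.0
```

Applied to the per-class mAP50 values of YOLOv8s in Table 3 (0.973, 0.99, 0.971, 0.983), `mean_average_precision` returns about 97.9, which matches the "All" row.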

3.3 Experiment result

As shown in Figure 4, the trials conducted with the YOLOv8s model give an average F1 of 94% over all classes. The core architecture of YOLOv8 is very similar to that of YOLOv5, except for the switch from the C3 module to the C2f module, which is built on the Cross Stage Partial (CSP) concept [44].

Figure 4. F1 chart

YOLOv8 brings significant improvements compared to previous versions such as YOLOv7 and previous architectures. YOLOv8 uses a more advanced model architecture with a deeper and more complex backbone to improve feature extraction as well as improvements to the head and neck architecture for better object detection at multiple scales. This model is faster in terms of inference thanks to better optimization and the use of the latest computing technologies, reducing latency and increasing throughput, making it more suitable for real-time applications. Additionally, YOLOv8 improves the quality of object detection through the introduction of anchor-free detection, which allows the model to be more flexible in detecting objects at various scales and locations without reliance on predefined anchor boxes. Better training algorithms, including more sophisticated data augmentation techniques and improved regularization methods, also contribute to increased accuracy.

The C2f module was integrated to improve gradient flow in YOLOv8 without compromising its lightweight design. At the end of the backbone, the SPPF module is used. It applies three max-pooling layers, each with a 5 × 5 kernel, in succession, and then concatenates the outputs of each layer. This allows objects to be identified accurately at various scales while keeping the structure streamlined and efficient.
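The pooling part of the SPPF step, three successive 5 × 5 max-pooling operations whose outputs are kept side by side for concatenation, can be sketched in plain Python. This is a simplified illustration on a single-channel feature map: it assumes stride 1 with the window clipped at the borders so that the map size is preserved, and it omits the convolutions that surround the pooling in the real module.

```python
def max_pool_same(fmap, k=5):
    """k x k max pooling with stride 1; the window is clipped at the borders."""
    pad = k // 2
    h, w = len(fmap), len(fmap[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [fmap[a][b]
                      for a in range(max(0, i - pad), min(h, i + pad + 1))
                      for b in range(max(0, j - pad), min(w, j + pad + 1))]
            row.append(max(window))
        out.append(row)
    return out


def sppf_branches(fmap, k=5):
    """Return the input and three serially pooled maps, ready to concatenate."""
    p1 = max_pool_same(fmap, k)
    p2 = max_pool_same(p1, k)
    p3 = max_pool_same(p2, k)
    return [fmap, p1, p2, p3]
```

Pooling serially means the second and third maps see 9 × 9 and 13 × 13 neighbourhoods of the input, so a single small kernel covers several scales at low cost.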

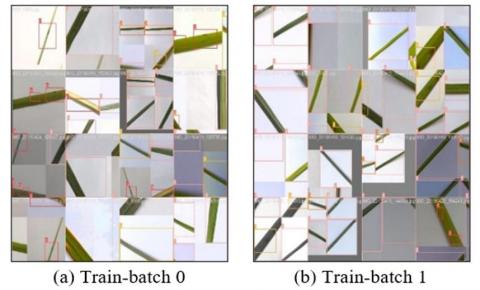

After training, the YOLOv8s model was used to detect disease on rice leaves, and the input images were visualized and labeled. Figure 5 shows a training batch of images with detected regions that have not yet been given label names, and Figure 6 shows the predictions with class name labels.

Figure 5. Train batch image

Figure 6. Prediction batch 0 and 1

In the experiments with the YOLOv8s model, the detections on the dataset images are precise and clear, in part because YOLOv8 applies Non-Maximum Suppression (NMS). NMS has been an important part of computer vision for decades and is widely used in edge detection, point feature detection, and object detection [45]. NMS keeps the bounding box with the highest score and discards neighbouring lower-scoring boxes that overlap it heavily, which resolves overlapping detections of the same object. This is why NMS is so frequently used in computer vision [45, 46]. For more details on the experiments with YOLOv8s, see Figure 7.
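A greedy form of NMS can be sketched in Python as follows. It is a generic illustration of the technique rather than the implementation inside YOLOv8; the function names and the IOU threshold of 0.5 are assumptions made for the example.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return the indices of the boxes kept, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too strongly.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep
```

For the boxes (0, 0, 10, 10), (1, 1, 11, 11), and (20, 20, 30, 30) with scores 0.9, 0.8, and 0.7, the first two have an IOU of 81/119 ≈ 0.68, so the second is suppressed and `nms` returns `[0, 2]`.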

Figure 7. Performance YOLOv8s

Experiments were run with the YOLOv8s model on the rice leaf disease dataset [34], and the outcomes are arranged in Table 4. The dataset is divided into four categories: hispa, healthy, leaf blast, and brown spot. The YOLOv8s model achieved the highest mAP values, with all classes at 97.9%, hispa at 97.1%, healthy at 99%, leaf blast at 98.3%, and brown spot at 97.3%.

Table 4. Testing performance with rice leaf disease dataset

| Model | Class | Images | Instances | P | R | mAP50 |
|---|---|---|---|---|---|---|
| YOLOv8-n | All | 3,216 | 3,920 | 0.879 | 0.892 | 0.944 |
| | Brown Spot | 3,216 | 743 | 0.868 | 0.864 | 0.926 |
| | Healthy | 3,216 | 1,390 | 0.936 | 0.991 | 0.98 |
| | Hispa | 3,216 | 740 | 0.843 | 0.864 | 0.93 |
| | Leaf Blast | 3,216 | 1,047 | 0.87 | 0.868 | 0.943 |
| YOLOv8-s | All | 3,216 | 3,920 | 0.938 | 0.948 | 0.979 |
| | Brown Spot | 3,216 | 743 | 0.936 | 0.934 | 0.973 |
| | Healthy | 3,216 | 1,390 | 0.961 | 0.994 | 0.99 |
| | Hispa | 3,216 | 740 | 0.916 | 0.918 | 0.971 |
| | Leaf Blast | 3,216 | 1,047 | 0.941 | 0.948 | 0.983 |

This shows that deep learning-based object detection models can adapt well in dynamic agricultural environments, coping with high variability in lighting, viewing angles, and weather conditions. The effectiveness of transfer learning in adapting models trained on general datasets to specific tasks with small datasets is also confirmed, reducing the need for large training data. The model's strong generalization capabilities enable accurate detection across a wide range of field conditions, while data augmentation techniques prove critical for improving model robustness and accuracy. This success also supports the integration of deep learning with IoT technology, creating intelligent solutions for real-time monitoring and decision making in the field. In addition, this research strengthens the theory of developing efficient energy-saving algorithms for IoT devices with limited power, as well as improving human-machine interaction in agriculture through a reliable automatic detection system.

Judging by the average precision (AP) in Table 4, the YOLOv8 model used in this research identifies and classifies rice plant diseases well on the Rice Diseases Image Dataset. Table 5 compares it with several previous studies, which used either their own collected datasets or the same dataset as this study, and shows that YOLOv8 outperforms several deep learning models previously applied to rice plant diseases.

Table 5. Previous research comparison

| Reference | Dataset | Methodology | Classification | Detection | Result AP (%) |
|---|---|---|---|---|---|
| [47] | Feni, Bangladesh | YOLOv5 | Yes | Yes | 80 |
| [38] | Rice Diseases Image Dataset | YOLOv4-Tiny | Yes | No | 80 |
| [17] | Tiled Image of Eight Rice Diseases | YOLOv4 | Yes | Yes | 91.14 |
| [4] | Rice Diseases Image Dataset | ADSNN-BO | Yes | Yes | 94.65 |
| Proposed method | Rice Diseases Image Dataset | YOLOv8 | Yes | Yes | 97.9 |

Application of YOLOv8 in real-world agricultural environments has significant practical implications. This technology enables early and accurate detection of rice plant diseases, which reduces the risk of disease spread and minimizes crop yield losses. With real-time detection through integration with IoT devices, farmers can receive quick alerts and take immediate corrective action, increasing farmland management efficiency. In addition, the application of YOLOv8 can reduce the overuse of pesticides, as correct diagnosis allows more targeted use of chemicals, reducing negative environmental impacts. From a cost perspective, although the initial investment in this technology may be high, the resulting efficiencies can lower long-term operational costs. This technology also has high scalability, enabling its use at various agricultural scales, from small to large. However, challenges such as the need for adequate technological infrastructure and training for farmers to operate these systems must be addressed to ensure widespread and successful adoption. Overall, YOLOv8 offers great potential to improve productivity, crop quality and sustainability of agricultural practices.

YOLOv8 is one of the newest deep learning models published by Ultralytics. It is claimed to incorporate many changes that let it outperform other deep learning models, with an architecture that detects objects quickly both in real time and on stored datasets. YOLOv8 can detect and segment objects in images while maintaining a high level of precision. Furthermore, thanks to its integrated architecture, a wide variety of computer vision tasks can be executed with just one model. To increase its usability, YOLOv8 can be integrated with IoT devices in smart farming solutions, which has great potential to increase efficiency and productivity. YOLOv8 can be used for real-time monitoring of crops and pests, more efficient land management, and increased crop productivity. Technically, this requires IoT devices compatible with YOLOv8, a reliable network for data transfer, adequate data processing infrastructure, and energy efficiency. Logistically, it requires planning the placement and maintenance of equipment, scalability considerations, training and technical support for farmers, and strong data security. With attention to these aspects, the integration can run smoothly and provide significant benefits for the agricultural sector.

Object detection, instance segmentation, and image classification are examples of the tasks that YOLOv8 supports. This flexibility is particularly important in applications that must carry out several tasks at once, such as video surveillance and image search. The same applies to autonomous vehicles.
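To make the multi-task point concrete, the sketch below maps each of the three tasks named above to a pretrained checkpoint file name. It assumes the naming convention used for the published Ultralytics YOLOv8 weights (a size letter followed by an optional task suffix); the helper `checkpoint_name` and the dictionary `TASK_SUFFIX` are hypothetical names introduced only for illustration. Note that, under this convention, each task uses its own weights within the same model family.

```python
# Assumed convention: "yolov8" + size letter + optional task suffix + ".pt".
# TASK_SUFFIX and checkpoint_name are illustrative, not part of any library.
TASK_SUFFIX = {
    "detect": "",        # object detection (the task used in this study)
    "segment": "-seg",   # instance segmentation
    "classify": "-cls",  # image classification
}

MODEL_SIZES = ("n", "s", "m", "l", "x")


def checkpoint_name(size: str, task: str) -> str:
    """Build the checkpoint file name for a YOLOv8 size and task."""
    if size not in MODEL_SIZES:
        raise ValueError(f"unknown model size: {size!r}")
    if task not in TASK_SUFFIX:
        raise ValueError(f"unknown task: {task!r}")
    return f"yolov8{size}{TASK_SUFFIX[task]}.pt"


# The small detection model discussed in this article.
detector = checkpoint_name("s", "detect")
# The segmentation variant of the same size.
segmenter = checkpoint_name("s", "segment")
print(detector, segmenter)
```

With the Ultralytics package installed, a file name of this form would typically be passed to its model loader; that step is omitted here so the sketch runs without external dependencies.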

This article presents the results of a study of CNN-based algorithms for object identification, focusing on YOLOv8n and YOLOv8s, released by Ultralytics. In our experiments, the YOLOv8s model stood out for its high precision, outperforming the alternatives considered in this study. The experiments also suggest that the YOLOv8 model can be developed further and applied in various fields and environments to help solve problems in the surrounding community.

Based on the outcomes of training the YOLOv8 model on the Rice Diseases Image Dataset, the mean Average Precision (mAP) scores for each class were as follows: hispa, 97.1%; leaf blast, 98.3%; brown spot, 97.3%; and healthy, 99%. The overall mAP value for all classes combined was 97%. The use of the YOLOv8s method in application scenarios involving object detection carries significant implications. The high precision exhibited by YOLOv8 has substantial prospects for advancements in various domains, including security surveillance, medical image analysis, and industrial automation. Furthermore, the algorithm's adaptability and efficiency present opportunities for its use in many novel solutions beyond the realm of object detection. This study emphasizes the pressing need for, and long-term benefits of, incorporating technology such as YOLOv8 within the agricultural industry, particularly for addressing diseases that affect rice crops. By leveraging advancements in information technology and visual computing, this approach enables proactive measures to mitigate crop failure caused by diseases.
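As a small worked example of how an overall score relates to per-class scores, the sketch below averages the four per-class values reported above. It assumes the overall mAP is the unweighted mean over classes, which is the usual definition but is not stated explicitly here; the function name `mean_over_classes` is hypothetical. Under that assumption the mean comes to roughly 97.9%, so the reported overall figure of 97% may reflect rounding down or a slightly different evaluation setting.

```python
# Per-class scores as reported in the text, in percent.
per_class_ap = {
    "hispa": 97.1,
    "leaf blast": 98.3,
    "brown spot": 97.3,
    "healthy": 99.0,
}


def mean_over_classes(ap_by_class: dict) -> float:
    """Unweighted mean of per-class scores (assumed definition of overall mAP)."""
    return sum(ap_by_class.values()) / len(ap_by_class)


overall = mean_over_classes(per_class_ap)
print(f"mean over {len(per_class_ap)} classes: {overall:.1f}%")
```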

The high accuracy of YOLOv8 in detecting rice diseases enables early detection and more effective control, reducing the risk of disease spread and increasing crop yields. This means that farmers can save costs by reducing unnecessary pesticide use and focusing on more precise preventive measures. Additionally, YOLOv8's ability to operate in real-time allows integration with IoT devices for continuous monitoring, providing quick alerts to farmers and enabling faster responses to disease threats. The outstanding precision demonstrated by YOLOv8 also holds great promise for advancements in various other fields. In security surveillance, YOLOv8 can be used for rapid and accurate detection of potential threats, enhancing safety and security in various environments. In medical image analysis, the high-precision detection capability can aid in early disease diagnosis and treatment, improving health outcomes for patients. In industrial automation, YOLOv8 can improve efficiency by automating inspection processes and quality control, reducing human errors and increasing productivity.
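The alerting step described above can be sketched as a simple filter over per-frame detections. This is a minimal illustration, not the system used in the study: the `Detection` record, the `disease_alerts` function, and the 0.5 confidence threshold are all assumptions introduced here, and the detections are made-up sample values rather than model output.

```python
from dataclasses import dataclass

# Disease classes from the dataset; "healthy" never triggers an alert.
DISEASE_CLASSES = {"hispa", "leaf blast", "brown spot"}


@dataclass
class Detection:
    """One detected object in a frame (hypothetical record type)."""
    label: str
    confidence: float


def disease_alerts(detections, min_confidence=0.5):
    """Keep only disease detections confident enough to notify the farmer."""
    return [
        d for d in detections
        if d.label in DISEASE_CLASSES and d.confidence >= min_confidence
    ]


# Illustrative detections for a single camera frame.
frame = [
    Detection("healthy", 0.97),
    Detection("leaf blast", 0.91),
    Detection("hispa", 0.32),  # below the threshold, so it is ignored
]

alerts = disease_alerts(frame)
for alert in alerts:
    print(f"ALERT: {alert.label} ({alert.confidence:.0%})")
```

In a deployment, the threshold would need tuning against field data: a lower value catches more early infections at the cost of more false alarms.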

Overall, the implementation of YOLOv8 in various application scenarios demonstrates significant potential for technological advancements and operational efficiency in various sectors. The success of this research confirms that the improvements made to YOLOv8 are not only relevant but also have a significant impact, providing intelligent and efficient solutions to complex object detection challenges across various fields.

[1] Sato, H., Ando, I., Hirabayashi, H., et al. (2008). QTL analysis of brown spot resistance in rice (Oryza sativa L.). Breeding Science, 58(1): 93-96. https://doi.org/10.1270/jsbbs.58.93

[2] Haque, M.E., Rahman, A., Junaeid, I., Hoque, S.U., Paul, M. (2022). Rice leaf disease classification and detection using YOLOv5. arXiv preprint arXiv:2209.01579. https://doi.org/10.48550/arXiv.2209.01579

[3] Alfarisi, M.S., Bintang, C.A., Ayatillah, S. (2018). EXSYS (drone security with audio and expert system) village for exploiting birds and identifying rights and rice diseases keeping food security and enhancing food development in Indonesia. Journal of Applied Agricultural Science and Technology, 2: 35-50.

[4] Wang, Y., Wang, H., Peng, Z. (2021). Rice diseases detection and classification using attention based neural network and Bayesian optimization. Expert Systems with Applications, 178: 114770. https://doi.org/10.1016/j.eswa.2021.114770

[5] Kusanti, J., Haris, N.A. (2018). Klasifikasi Penyakit daun padi berdasarkan hasil ekstraksi fitur GLCM interval 4 sudut. Jurnal Informatika: Jurnal Pengembangan IT, 3(1): 1-6. https://doi.org/10.30591/jpit.v3i1.669

[6] Phadikar, S., Sil, J., Das, A.K. (2012). Classification of rice leaf diseases based on morphological changes. International Journal of Information and Electronics Engineering, 2(3): 460-463. http://doi.org/10.7763/IJIEE.2012.V2.137

[7] Khoiruddin, M., Junaidi, A., Saputra, W.A. (2022). Klasifikasi penyakit daun padi menggunakan convolutional neural network. Journal of Dinda: Data Science, Information Technology, and Data Analytics, 2(1): 37-45. https://doi.org/10.20895/dinda.v2i1.341

[8] Zahrah, S., Saptono, R., Suryani, E. (2016). Identifikasi gejala penyakit padi menggunakan operasi morfologi Citra. In Seminar Nasional Ilmu Komputer (SNIK 2016)-Semarang, pp. 100-106.

[9] Ghosh, A., Sufian, A., Sultana, F., Chakrabarti, A., De, D. (2020). Fundamental concepts of convolutional neural network. In Recent Trends and Advances in Artificial Intelligence and Internet of Things, pp. 519-567. https://doi.org/10.1007/978-3-030-32644-9_36

[10] Umam, C., Handoko, L.B. (2020). Convolutional neural network (CNN) untuk identifkasi karakter hiragana. In Prosiding Seminar Nasional Lppm Ump, pp. 527-533.

[11] Wang, J., Wu, Q.J., Zhang, N. (2024). You only look at once for real-time and generic multi-task. IEEE Transactions on Vehicular Technology, 73(9): 12625-12637. https://doi.org/10.1109/TVT.2024.3394350

[12] Lu, L. (2023). Improved YOLOv8 detection algorithm in X-ray contraband. In Advances in Artificial Intelligence and Machine Learning, 3(3): 72.

[13] Costales, H., Callejo-Arruejo, A., Rafanan, N. (2023). Development of a prototype application for rice disease detection using convolutional neural networks. arXiv preprint arXiv:2301.05528. https://doi.org/10.48550/arXiv.2301.05528

[14] Anwar, M., Kristian, Y., Setyati, E. (2023). Klasifikasi penyakit tanaman cabai rawit dilengkapi dengan segmentasi citra daun dan buah menggunakan YOLOv7. Journal of Information Technology and Computer Science, 6(1): 540-548. https://doi.org/10.31539/intecoms.v6i1.6071

[15] Arshed, M.A., Mumtaz, S., Ibrahim, M., Dewi, C., Tanveer, M., Ahmed, S. (2024). Multiclass AI-generated deepfake face detection using patch-wise deep learning model. Computers, 13(1): 31. https://doi.org/10.3390/computers13010031

[16] Borhani, Y., Khoramdel, J., Najafi, E. (2022). A deep learning based approach for automated plant disease classification using vision transformer. Scientific Reports, 12(1): 11554. https://doi.org/10.1038/s41598-022-15163-0

[17] Kiratiratanapruk, K., Temniranrat, P., Sinthupinyo, W., Marukatat, S., Patarapuwadol, S. (2022). Automatic detection of rice disease in images of various leaf sizes. arXiv preprint arXiv:2206.07344. https://doi.org/10.48550/arXiv.2206.07344

[18] Mustofa, S., Munna, M.M.H., Emon, Y.R., Rabbany, G., Ahad, M.T. (2023). A comprehensive review on plant leaf disease detection using deep learning. arXiv preprint arXiv:2308.14087. https://doi.org/10.48550/arXiv.2308.14087

[19] Redmon, J., Divvala, S., Girshick, R., Farhadi, A. (2016). You only look once: Unified, real-time object detection. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, pp. 779-788. https://doi.org/10.1109/CVPR.2016.91

[20] Terven, J.R., Cordova-Esparza, D.M. (2023). A comprehensive review of YOLO: From YOLOv1 and beyond. arXiv preprint arXiv:2304.00501v1. https://doi.org/10.48550/arXiv.2304.00501

[21] Ahmed, N.S., Noor, S.S., Sikder, A.I.S., Paul, A. (2023). A post-processing based Bengali document layout analysis with YOLOv8. arXiv preprint arXiv:2309.00848. https://doi.org/10.48550/arXiv.2309.00848

[22] Patel, S.H., Kamdar, D. (2023). Accurate ball detection in field hockey videos using YOLOv8 algorithm. International Journal of Advance Research, Ideas and Innovations in Technology, 9(2): 411-418. https://www.ijariit.com/manuscripts/v9i2/V9I2-1305.pdf

[23] Mazen, F.M.A., Seoud, R.A.A., Shaker, Y.O. (2023). Deep learning for automatic defect detection in PV modules using electroluminescence images. IEEE Access, 11: 57783-57795. https://doi.org/10.1109/ACCESS.2023.3284043

[24] Kang, M., Ting, C.M., Ting, F.F., Phan, R.C.W. (2023). BGF-YOLO: Enhanced YOLOv8 with multiscale attentional feature fusion for brain tumor detection. arXiv preprint arXiv:2309.12585. https://doi.org/10.48550/arXiv.2309.12585

[25] Sharma, N., Baral, S., Paing, M.P., Chawuthai, R. (2023). Parking time violation tracking using YOLOv8 and tracking algorithms. Sensors, 23(13): 5843. https://doi.org/10.3390/s23135843

[26] Hemmatirad, K., Babaie, M., Hodgin, J., Pantanowitz, L., Tizhoosh, H.R. (2023). An investigation into glomeruli detection in kidney H&E and PAS images using YOLO. arXiv preprint arXiv:2307.13199. https://doi.org/10.48550/arXiv.2307.13199

[27] Ćorović, A., Ilić, V., Ðurić, S., Marijan, M., Pavković, B. (2018). The real-time detection of traffic participants using YOLO algorithm. In 2018 26th Telecommunications Forum (TELFOR), Belgrade, Serbia, pp. 1-4. https://doi.org/10.1109/TELFOR.2018.8611986

[28] Chen, R.C., Dewi, C., Zhuang, Y.C., Chen, J.K. (2023). Contrast limited adaptive histogram equalization for recognizing road marking at night based on YOLO models. IEEE Access, 11: 92926-92942. https://doi.org/10.1109/ACCESS.2023.3309410

[29] Dewi, C., Chen, R.C., Zhuang, Y.C., Jiang, X., Yu, H. (2023). Recognizing road surface traffic signs based on YOLO models considering image flips. Big Data and Cognitive Computing, 7(1): 54. https://doi.org/10.3390/bdcc7010054

[30] Ultralytics. GitHub. https://github.com/ultralytics/ultralytics

[31] Dewi, C., Chen, A.P.S., Christanto, H.J. (2023). YOLOv7 for face mask identification based on deep learning. In 2023 15th International Conference on Computer and Automation Engineering (ICCAE): Sydney, Australia, pp. 193-197. https://doi.org/10.1109/ICCAE56788.2023.10111427

[32] Tai, S.K., Dewi, C., Chen, R.C., Liu, Y.T., Jiang, X., Yu, H. (2020). Deep learning for traffic sign recognition based on spatial pyramid pooling with scale analysis. Applied Sciences, 10(19): 6997. https://doi.org/10.3390/app10196997

[33] Dewi, C., Chen, R.C. (2022). Combination of resnet and spatial pyramid pooling for musical instrument identification. Cybernetics and Information Technologies, 22(1): 104-116. https://doi.org/10.2478/cait-2022-0007

[34] Rice Diseases Image Dataset. https://www.kaggle.com/datasets/minhhuy2810/rice-diseases-image-dataset.

[35] Hasan, N.F. (2023). Deteksi dan klasifikasi penyakit pada daun kopi menggunakan YOLOv7. Jurnal Sisfokom (Sistem Informasi Dan Komputer), 12(1): 30-35. https://doi.org/10.32736/sisfokom.v12i1.1545

[36] Dewi, C., Christanto, H.J. (2023). Automatic medical face mask recognition for COVID-19 mitigation: Utilizing YOLOV5 object detection. Revue d'Intelligence Artificielle, 37(3): 627-638. https://doi.org/10.18280/ria.370312

[37] Dharneeshkar, J., Aniruthan, S.A., Karthika, R., Parameswaran, L. (2020). Deep learning based detection of potholes in Indian roads using YOLO. In 2020 International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, pp. 381-385. https://doi.org/10.1109/ICICT48043.2020.9112424

[38] Agustin, M., Hermawan, I., Arnaldy, D., Muharram, A.T., Warsuta, B. (2023). Design of livestream video system and classification of rice disease. International Journal on Informatics Visualization, 7(1): 139-145. https://doi.org/10.30630/joiv.7.1.1336

[39] Nguyen, K.D., Phung, T.H., Cao, H.G. (2023). A SAM-based solution for hierarchical panoptic segmentation of crops and weeds competition. arXiv preprint arXiv:2309.13578. https://doi.org/10.48550/arXiv.2309.13578

[40] Du, H. (2023). General object detection algorithm YOLOv5 comparison and improvement. Doctoral dissertation, California State University, Northridge.

[41] Reis, D., Kupec, J., Hong, J., Daoudi, A. (2023). Real-time flying object detection with YOLOv8. arXiv preprint arXiv:2305.09972. https://doi.org/10.48550/arXiv.2305.09972

[42] Harun, A., Kharisma, O.B. (2023). Implementasi deep learning menggunakan metode you only look once untuk mendeteksi rokok. Jurnal Media Informatika Budidarma, 7(1): 107-116.

[43] Pratiwi, N.K.C., Ibrahim, N., Fu'adah, Y.N., Rizal, S. (2021). Deteksi parasit plasmodium pada citra mikroskopis hapusan darah dengan metode deep learning. ELKOMIKA: Jurnal Teknik Energi Elektrik, Teknik Telekomunikasi, & Teknik Elektronika, 9(2): 306-317. https://doi.org/10.26760/elkomika.v9i2.306

[44] Dewi, C., Chen, A.P.S., Christanto, H.J. (2023). Recognizing similar musical instruments with YOLO models. Big Data and Cognitive Computing, 7(2): 94. https://doi.org/10.3390/bdcc7020094

[45] He, Y., Zhu, C., Wang, J., Savvides, M., Zhang, X. (2019). Bounding box regression with uncertainty for accurate object detection. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, pp. 2883-2892. https://doi.org/10.1109/CVPR.2019.00300

[46] Morbekar, A., Parihar, A., Jadhav, R. (2020). Crop disease detection using YOLO. In 2020 International Conference for Emerging Technology (INCET), Belgaum, India, pp. 1-5. https://doi.org/10.1109/INCET49848.2020.9153986

[47] Jiang, L., Liu, H., Zhu, H., Zhang, G. (2022). Improved YOLO v5 with balanced feature pyramid and attention module for traffic sign detection. In 2021 International Conference on Physics, Computing and Mathematical (ICPCM2021), pp. 1-7. https://doi.org/10.1051/matecconf/202235503023